CBC creates Web-based, CAPI, or paper-and-pencil interviews. CBC comes with a 50-question system that lets you ask up to 50 other standard survey questions (e.g., numerics, open-ends, check-boxes). Additional capacity for standard survey questions is available by purchasing a larger Lighthouse Studio license.

The Choice Question

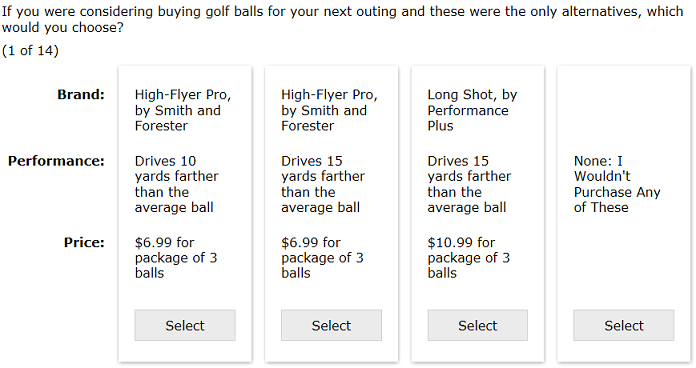

A CBC question is often referred to as a "task." A set of products (concepts) are displayed on the screen, and in the standard formatting the respondent chooses among the concepts. For example:

Choosing the best concept among a set of concepts is the standard approach that is advocated by most experts in the industry. Other options supported by the CBC software include:

•Constant-sum (allocation), where respondents type a number beneath concepts based on relative preference, percent of volume, or volume

•Best-Worst CBC (respondent selects best and worst concepts within each task--requires Advanced Design Module license)

•Dual-Response None, an increasingly popular format that asks about the "None" concept in a second-stage question

Two important decisions to make when constructing choice tasks are 1) how many concepts to present per task, and 2) how many total tasks to ask.

From a statistical viewpoint, choice tasks are not a very efficient way to learn about preferences. Respondents evaluate multiple concepts but, in traditional discrete choice, tell us only which one they prefer. We don't learn how strong that preference is relative to the other product concepts. Showing more product concepts per screen increases the information content of each task, though it also increases task difficulty. Research has shown that respondents are quite efficient at processing information about many concepts: it takes them considerably less than twice as long to answer choice tasks with four concepts as tasks with two concepts. Research has also pointed out the value of forcing at least some levels within attributes to repeat within each choice task (level overlap). This can be accomplished by showing more products on the screen than there are levels per attribute. In general, for marketing applications, we recommend showing around three to six concepts per task. However, there may be instances (e.g., a beverage study with 25 brands plus price) where showing more product concepts per screen is appropriate and more realistically portrays the actual buying situation. With the Advanced Design Module for CBC, you can display up to 100 product graphics on the screen, presented as if they were resting on "store shelves."
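A small counting sketch makes the information argument concrete. It is our own illustration (the function name is hypothetical, not part of CBC): under the usual reading of a first choice, picking one concept out of k reveals that it beats each of the other k - 1 concepts, while the order among the non-chosen concepts stays unknown.

```python
def implied_comparisons(n_concepts: int) -> tuple[int, int]:
    """Return (revealed, unrevealed) pairwise relations for one first choice."""
    if n_concepts < 2:
        raise ValueError("a choice task needs at least two concepts")
    all_pairs = n_concepts * (n_concepts - 1) // 2
    revealed = n_concepts - 1  # the chosen concept beats each alternative
    return revealed, all_pairs - revealed  # order among the others is unknown

for k in (2, 3, 4, 6):
    revealed, unrevealed = implied_comparisons(k)
    print(f"{k} concepts: {revealed} revealed, {unrevealed} unrevealed")
```

Both counts grow with k: more concepts reveal more per task, yet a single choice still leaves much of a full ranking unobserved.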

It is worth noting that some choice contexts (such as healthcare outcomes) involve challenging and often unfamiliar choice scenarios, and researchers in this area have sometimes found that asking respondents to evaluate more than two concepts within a choice task is inordinately difficult. Similarly, when a large proportion of respondents are expected to complete the CBC survey on mobile devices with relatively small screens, showing perhaps just two or three product concepts per task is advisable.

With randomized choice designs, given a large enough sample size, we could model preferences at the aggregate level by asking each respondent just one choice task. In practice, researchers recognize that individuals are expensive to interview and that it makes sense to collect more information from each person in CBC studies. With multiple observations per respondent, one can model across-respondent heterogeneity in preferences, which leads to more accurate choice simulators.

In a 1996 meta-analysis of 21 CBC data sets, we found that multiple observations per respondent are quite valuable and that respondents could reliably answer up to at least 20 questions, and perhaps even more. However, we discovered that respondents process earlier tasks differently from later questions. Respondents paid more attention to brand in the first tasks and increased their focus on price in later questions. (See Johnson and Orme's article entitled "How Many Questions Should You Ask in Choice-Based Conjoint?" available for downloading from the Technical Papers section of our home page: http://www.sawtoothsoftware.com/support/technical-papers).

Over the last fifteen years, other researchers have examined the same issues and have suggested that respondents (especially internet and panel respondents) are probably less patient and conscientious with long CBC surveys involving many attributes than respondents of 25 years ago. They are perhaps quicker to resort to simplification heuristics to navigate complex CBC tasks, and responses beyond about the tenth task don't seem to reveal much more about each respondent's choice process. These authors have recommended using fewer than a dozen tasks, even if the attribute list is relatively long, and meeting the information requirements of long attribute lists by increasing the sample size.

With CAPI or paper-based CBC research, we recommend asking somewhere in the range of 8 to 15 choice tasks. With Web and mobile interviewing, fewer tasks might be appropriate if there is an opportunity for increased sample sizes (which often is the case). If estimating individual-level utilities using CBC/HB, we'd recommend at least six choice tasks to achieve good results based on simulated shares, but about 10 choice tasks or more for developing robust predictions at the individual level (again assuming a typical design). Before finalizing the number of concepts or tasks to be asked, we urge you to pretest the questionnaire with real respondents to make sure the questionnaire is not too long or overly complex.

With CBC, all of the tasks are tied to the same layout, including header text and footer text. You cannot change the number of product concepts or other settings within your choice tasks midstream (though you can include multiple CBC exercises with different characteristics within the same study, which can be interwoven). In addition to the regular choice tasks, you can add tasks that have fixed designs ("holdout" tasks) described later. You can also insert other text screens or generic survey questions between choice tasks.

The "None" Option

Choice-based conjoint questions can be designed to have a "None" option, sometimes referred to as the constant alternative. It is argued that the None option in CBC tasks better mimics the real world, since buyers are not required to choose products that don't satisfy them. The None option can also be used to reflect a status quo choice, such as "I'd continue to use my current long-distance service provider."

If the None option is present, a separate utility weight is computed for the None parameter (under CBC/HB, latent class, or logit analysis). The more likely respondents are to choose None relative to product concepts, the higher the utility of the None option. The None parameter can be used in market simulations to estimate the proportion of respondents that would not choose any of the simulated product concepts. You can include more than one constant alternative in the questionnaire if using the CBC Advanced Design Module.
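How a None utility turns into a None share can be sketched with the logit (share of preference) rule. This is a minimal sketch assuming that rule; the utilities are invented and the function name is hypothetical, not part of CBC's simulator. In a share-of-preference simulation, averaging the last share across respondents would give the estimated proportion choosing None.

```python
import math

def shares_of_preference(concept_utils, none_util=None):
    """Logit shares for one respondent; the None share, if any, is returned last."""
    utils = list(concept_utils)
    if none_util is not None:
        utils.append(none_util)
    top = max(utils)  # subtracting the max avoids overflow in exp()
    weights = [math.exp(u - top) for u in utils]
    total = sum(weights)
    return [w / total for w in weights]

# Invented total utilities for three simulated products, plus a None utility.
shares = shares_of_preference([1.2, 0.4, -0.3], none_util=0.5)
print([round(s, 3) for s in shares])
print(shares_of_preference([1.2, 0.4, -0.3], none_util=1.0)[-1] > shares[-1])  # True
```

Raising `none_util` raises the last share, mirroring the statement that a greater propensity to choose None corresponds to a higher None utility.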

In general, we recommend including None in CBC questionnaires but paying less attention to (or completely ignoring) it in market simulations. The propensity to choose None can be a telling measure when comparing groups or individuals. We suggest that the percentage of None choices in Counts or simulations be viewed in a relative rather than an absolute sense.

The use of the "None" concept in partial-profile CBC studies (supported only by the Advanced Design Module for CBC) is problematic. The None weight varies significantly depending on how many attributes are displayed in the partial-profile task. Patterson and Chrzan (2003) showed that as the number of attributes displayed increases, the propensity to choose None also increases.

Randomized Versus Fixed Designs

Two schools of thought have developed about how to design and carry out choice-based conjoint studies.

1) Some researchers prefer fixed orthogonal designs. Such designs often employ a single version of the questionnaire that is seen by all respondents, although sometimes respondents are divided randomly into groups, with different groups receiving different questionnaire versions (blocks, or subsets of the larger fixed design). Fixed orthogonal designs have the advantage of high efficiency at measuring main effects and the particular interactions for which they are designed. It is interesting to note that for imbalanced asymmetric designs (where there are large differences in the numbers of levels among attributes) fixed orthogonal designs often can be less efficient than random designs.

2) Other researchers, particularly those accustomed to computer-administered interviewing, prefer controlled random designs. The CBC software, for example, can design interviews with nearly-orthogonal and level-balanced designs, in which each respondent receives a unique set of questions. Attribute and concept order can be randomized across respondents. Such designs are often slightly less efficient than truly orthogonal designs (though they can be more efficient with asymmetric designs), but they have the offsetting advantage that all interactions can be measured, whether or not they are recognized as important at the time the study is designed. Randomized plans also reduce biases due to order and learning effects, relative to fixed plans.

CBC can administer fixed designs, random designs, or designs generated from an outside source (via the ability to import a design from a .csv file). If a fixed design is chosen, then the researcher must specify that design (usually by importing it). If a randomized design is chosen, then it will be produced automatically and saved to a design file that is later uploaded to your server if using Web-based data collection, or to another device if using CAPI-based interviewing. If using paper-based interviewing, a limited set of questionnaire versions (a subset of the potential random plan) is often used. Most CBC users have favored the ease of implementation and robust characteristics of the randomized approach.

We have tried to make CBC as easy to use and automatic as possible. The researcher must decide on the appropriate attributes and their levels and compose whatever explanatory text and/or generic survey questions are desired during the interview. Absolutely no "programming" is involved. Every aspect of designing the interviews and conducting the analysis is managed through the point-and-click Windows interface. Thus, we hope that CBC will make choice-based conjoint analysis accessible to individuals and organizations who may not have the statistical or programming expertise that would otherwise be required to design and carry out such studies.

Random Design Strategies

Any experimental design from any source may be imported from a .CSV file into CBC, but most users will probably rely on one of the four randomized design options. When CBC constructs tasks using its controlled randomization strategies, some efficiency is often sacrificed compared to strictly orthogonal designs of fixed tasks, but the loss is quite small, usually in the range of 5 to 10%. There are also important compensating benefits: over a large sample of respondents, so many different combinations occur that random designs can be robust in the estimation of all effects, rather than just those anticipated to be of interest when the study is undertaken. Potential biases from learning and order effects can also be reduced.

The earliest versions of CBC offered two randomized design options: complete enumeration and the shortcut method. Though we refer to these as "randomized designs," these designs are chosen very carefully, as will be explained.

The complete enumeration and shortcut methods are heuristic design algorithms that generate designs conforming to the following principles:

Minimal Overlap: Each attribute level is shown as few times as possible in a single task. If an attribute's number of levels is equal to the number of product concepts in a task, each level is shown exactly once.

Level Balance: Each level of an attribute is shown approximately an equal number of times.

Orthogonality: Attribute levels are chosen independently of other attribute levels, so that each attribute level's effect (utility) may be measured independently of all other effects.
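The three principles can be checked directly on a design. The sketch below is our own illustration: it assumes a hypothetical in-memory layout (a list of tasks, each a list of concepts, each concept a tuple of 0-based level indices), and the helper names are not CBC functions.

```python
from collections import Counter

# Hypothetical representation: a design is a list of tasks, each task a list of
# concepts, and each concept a tuple of 0-based level indices (one per attribute).

def level_counts(design, attr):
    """Level balance: how often each level of `attr` appears across all tasks."""
    return Counter(concept[attr] for task in design for concept in task)

def max_repeats_in_task(design, attr):
    """Overlap: the most times any single level of `attr` appears within one task."""
    return max(max(Counter(c[attr] for c in task).values()) for task in design)

def pair_counts(design, a, b):
    """Rough orthogonality check: co-occurrence counts of levels of attributes a and b."""
    return Counter((c[a], c[b]) for task in design for c in task)

# Two tasks x three concepts; both attributes have three levels (0, 1, 2).
design = [
    [(0, 1), (1, 2), (2, 0)],
    [(0, 2), (1, 0), (2, 1)],
]
print(level_counts(design, 0))          # each level shown twice: balanced
print(max_repeats_in_task(design, 0))   # 1: minimal overlap
print(pair_counts(design, 0, 1))
```

With only six concepts, at most six of the nine possible level pairs can appear, so this toy design cannot have equal pair counts; the check simply makes such gaps visible.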

Assuming that respondents evaluate CBC questionnaires using an additive compensatory rule consistent with random utility theory, minimal overlap has statistical benefits in terms of increasing precision for main effects, but it is suboptimal for measuring interactions between attributes.

Many, if not most, respondents do not adhere to the additive compensatory rule. When that's the case, some degree of level overlap (repeating levels within a choice task) can lead to more thoughtful responses that reveal deeper preference information than minimal overlap does. To illustrate, consider the extreme case of a respondent who requires Brand A. If only one Brand A product is available per task, the respondent's only option is to choose it each time. Such a respondent can answer these CBC questions in a matter of seconds, without revealing anything about his/her preferences for the remaining attributes. But if two Brand A products sometimes appear in the same task, the respondent is encouraged to ponder and express what aspects other than brand affect the decision.

Level overlap can be added to designs simply by increasing the number of concepts per task. For example, if each attribute has four levels, then showing six concepts per task means that two levels must be repeated in each choice task. Six concepts per task also provides more raw information for estimating part-worth utilities than four concepts per task. (Yet another reason to advocate showing more rather than fewer concepts per task.)
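The arithmetic behind the four-level, six-concept example is the pigeonhole principle. This sketch (hypothetical helper names) computes, for one attribute in one task, how many level appearances must be repeats and the smallest count the most frequently shown level can have.

```python
import math

def forced_repeats(n_concepts: int, n_levels: int) -> int:
    """Minimum number of repeated level appearances for one attribute in one task.

    Only n_levels concept slots can show a not-yet-used level; every other slot
    must reuse a level (pigeonhole principle).
    """
    return max(0, n_concepts - n_levels)

def min_top_count(n_concepts: int, n_levels: int) -> int:
    """Smallest achievable count for the most-shown level in a task."""
    return math.ceil(n_concepts / n_levels)

print(forced_repeats(6, 4), min_top_count(6, 4))  # 2 2
print(forced_repeats(4, 4), min_top_count(4, 4))  # 0 1
```

With six concepts and four levels, two appearances must be repeats; spread as evenly as possible, that means two levels shown twice each, matching the example in the text.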

Another way to add level overlap within your designs is to use the Balanced Overlap or purely Random methods.

Below, we describe the design methods in more detail and conclude with more advice on when to use the different design approaches.

Complete Enumeration:

The complete enumeration strategy considers all possible concepts (except those indicated as prohibited) and chooses each one so as to produce the most nearly orthogonal design for each respondent, in terms of main effects. The concepts within each task are also kept as different as possible (minimal overlap); if an attribute has at least as many levels as the number of concepts in a task, then it is unlikely that any of its levels will appear more than once in any task.

Complete enumeration may require that a very large number of concepts be evaluated to construct each task, and this can pose a daunting processing job for the computer. The base CBC software permits up to 10 attributes, with up to 15 levels each. Suppose there were 4 concepts per task. At those limits the number of possible concepts to be evaluated before displaying each task would be 4 x 15^10 = 2,306,601,562,500!
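The figure above can be reproduced directly: 15^10 possible concepts, counted once for each of the 4 concept positions. The last two lines compare two ways of growing the study; they are our own illustration of how the candidate count scales, not a measurement of CBC's run time.

```python
max_levels = 15        # base CBC limit per attribute, from the text
max_attributes = 10    # base CBC limit on attributes, from the text
concepts_per_task = 4  # the example's task size

possible_concepts = max_levels ** max_attributes  # one concept per level combination
evaluations = concepts_per_task * possible_concepts
print(f"{possible_concepts:,}")  # 576,650,390,625
print(f"{evaluations:,}")        # 2,306,601,562,500

# Growth comparison: one more 15-level attribute vs. one more level on a single attribute.
print(max_levels ** (max_attributes + 1) // possible_concepts)  # 15
print(16 * max_levels ** (max_attributes - 1) / possible_concepts)
```

Adding an attribute multiplies the number of candidate concepts by that attribute's level count, whereas adding one level to an existing 15-level attribute multiplies it only by 16/15.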

This is far too great a burden for even the fastest computers today and would take a great deal of time for the design generator to produce a design file. Generally, CBC design specifications in practice are more manageable and speed of computation is usually not an issue. Furthermore, with CBC, the researcher generates the experimental plan prior to conducting data collection. Thus, respondents do not need to wait for a new design to be generated--they are simply randomly assigned to receive one of the many questionnaire versions previously generated.

The time required to compute designs under Complete Enumeration is more sensitive to the number of attributes in your study than to the number of levels for attributes. If you have just a few attributes, you should experience little delay in design computation, even if one of your attributes has scores of levels.

Shortcut Method:

The faster "shortcut" strategy makes a much simpler computation. It attempts to build each concept by choosing attribute levels used least frequently in previous concepts for that respondent. Unlike complete enumeration that keeps track of co-occurrences of all pairs of attribute levels, the shortcut strategy considers attributes one-at-a-time. If two or more levels of an attribute are tied for the smallest number of previous occurrences, a selection is made at random. With the shortcut method, as well as with complete enumeration, an attempt is made to keep the concepts in any task as different from one another as possible (minimal overlap). When there is more than one less-frequently-used level for any attribute, an attempt is made to choose one that has been used least in the same task.
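The shortcut logic described above can be sketched in a few lines. This is a simplified illustration under our own assumptions (a single respondent, 0-based level indices, no prohibitions, no check for duplicate concepts, hypothetical function name), not the algorithm as implemented in CBC.

```python
import random
from collections import Counter

def shortcut_design(levels_per_attr, n_tasks, n_concepts, rng):
    """Simplified sketch of a 'shortcut'-style design for one respondent.

    Each attribute is handled on its own: pick the level used least often so far
    for this respondent; break ties by fewest uses in the current task, then at random.
    """
    used = [Counter() for _ in levels_per_attr]  # uses across all previous concepts
    design = []
    for _ in range(n_tasks):
        in_task = [Counter() for _ in levels_per_attr]  # uses within this task
        task = []
        for _ in range(n_concepts):
            concept = []
            for a, n_levels in enumerate(levels_per_attr):
                fewest = min(used[a][lv] for lv in range(n_levels))
                cands = [lv for lv in range(n_levels) if used[a][lv] == fewest]
                fewest_here = min(in_task[a][lv] for lv in cands)
                cands = [lv for lv in cands if in_task[a][lv] == fewest_here]
                level = rng.choice(cands)  # remaining ties broken at random
                used[a][level] += 1
                in_task[a][level] += 1
                concept.append(level)
            task.append(tuple(concept))
        design.append(task)
    return design

rng = random.Random(7)
for task in shortcut_design([3, 3, 4], n_tasks=2, n_concepts=3, rng=rng):
    print(task)
```

Because every pick goes to a least-used level, each attribute's level counts never differ by more than one (level balance). Co-occurrences between attributes are not tracked, which is the key difference from complete enumeration.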

Designs composed using complete enumeration are of high quality, and those composed by the shortcut method are also quite acceptable.

Balanced Overlap Method:

This method is a middle ground between the random and the complete enumeration strategies. It permits roughly half as much overlap as the random method. It keeps track of the co-occurrences of all pairs of attribute levels, but applies a relaxed standard relative to complete enumeration in order to permit level overlap within the same task. No duplicate concepts are permitted within the same task.

The balanced overlap method takes about the same time to compute as complete enumeration and is slower than the random or shortcut methods.

Random Method:

The random method employs random sampling with replacement for choosing concepts. Sampling with replacement permits level overlap within tasks. The random method permits an attribute to have an identical level across all concepts, but it does not permit two identical concepts (on all attributes) to appear within the same task.

The random method computes about as quickly as the shortcut method. Unless the primary goal of the research is the study of interaction effects, we generally do not recommend using the purely Random method.
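The purely random method, as described, can be sketched as follows. Each attribute level is drawn independently for each concept, which is equivalent to sampling concepts from the full factorial with replacement, and a concept identical on all attributes to one already in the task is redrawn. This is a minimal sketch assuming no prohibitions and 0-based levels; the function name is hypothetical.

```python
import random

def random_task(levels_per_attr, n_concepts, rng):
    """Sketch of the purely random method for one task.

    Levels may repeat within a task (even across all concepts), but two
    concepts identical on every attribute are not allowed in the same task.
    """
    n_possible = 1
    for n in levels_per_attr:
        n_possible *= n
    if n_concepts > n_possible:
        raise ValueError("not enough distinct concepts for this task size")
    task = []
    while len(task) < n_concepts:
        concept = tuple(rng.randrange(n) for n in levels_per_attr)
        if concept not in task:  # redraw exact duplicates
            task.append(concept)
    return task

rng = random.Random(1)
print(random_task([2, 3, 4], n_concepts=4, rng=rng))
```

With a one-level attribute, for example `random_task([1, 2], 2, rng)`, every concept shares the same level on the first attribute, which the random method permits.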

CBC's Design File

CBC automatically creates a design file that is

a) uploaded to the server if using web-based data collection,

b) copied to other PCs or devices if using CAPI-based data collection, or

c) used to generate a questionnaire file if using paper-based data collection.

During the process, you are asked to specify how many versions of the questionnaire should be written to that design file. For computer- or device-based interviewing, we generally recommend including at least 100 versions, as there are benefits to fielding many versions of the questionnaire. Ideally, each respondent would receive his/her own version of the questionnaire. However, returns diminish, and beyond a certain point additional versions offer very little to no practical benefit. For that reason, we limit the number of versions that can be placed in the design file to 999. If you have more than 999 respondents, some respondents will necessarily receive the same designs. For all practical purposes, with so many unique versions in the total design pool, this poses no harm.

Suggestions for Randomized Designs

Generally, we recommend asking about 8 to 15 choice tasks, though this recommendation may vary depending on the attribute list and sample size. We prefer random task generation (especially Balanced Overlap). We caution against questionnaires with no level overlap: if a respondent has a critical "must-have" level, then only one product per choice task could satisfy him/her. One can achieve some level overlap simply by displaying more product concepts than there are levels per attribute. Alternatively, the default "Balanced Overlap" design method ensures at least a modest amount of level overlap.

Interest in interaction terms is another reason to include at least some degree of overlap in CBC designs. Overlap for an attribute can be added to a design simply by showing more concepts per task than the attribute has levels.

In summary, we suggest using balanced overlap (or the complete enumeration/shortcut methods, if some level overlap can be added to the tasks by virtue of showing more concepts on the screen than there are levels per attribute) for main-effects only designs. If detecting and measuring interactions is the primary goal (and sample size is relatively large), then the purely random approach is favored. If the goal is to estimate both main effects and interactions efficiently, then overlap should be built into the design, at least for the attributes involved in the interaction. Using more concepts than attribute levels with complete enumeration, or utilizing the compromise balanced overlap approach would seem to be good alternatives.

Importing Other Designs

Some researchers will want to implement a specific design generated by a design specialist. You can import such designs into CBC.

Mixed Overlap Designs

Some researchers may want to force some attributes to have minimal overlap and other attributes to have some overlap (i.e. using Balanced Overlap). Imagine a situation in which the first attribute is to have minimal overlap (each level represented once per task) and the remaining attributes are to be designed using Balanced Overlap. The researcher could generate a design using all but the first attribute under Balanced Overlap, and export the design to a .csv file. Then, the researcher could modify the .csv file using Excel to insert an attribute in the first position where each level appears once per task. The last step is to import that new design into a CBC study that reflects all attributes.

One could use a similar approach to combine designs where multiple attributes were generated using Balanced Overlap and multiple attributes were generated using Complete Enumeration. Of course, care should be taken to test the design efficiency of the final design.
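If you would rather script the spreadsheet step of the mixed-overlap procedure than do it in Excel, the Python sketch below shows one way. The column layout (Version, Task, Concept, then one column per attribute with 1-based level codes) and the new column name are assumptions for illustration; check them against the header of the .csv file CBC actually exports before adapting this.

```python
import csv
import io
import random
from collections import defaultdict

def add_minimal_overlap_attribute(csv_text, n_levels, rng, new_name="Att New"):
    """Insert a new first attribute in which each level appears once per task.

    Assumed (hypothetical) layout: columns Version, Task, Concept, then one
    column per attribute holding 1-based level codes. Every task must show
    exactly n_levels concepts so that each level can appear exactly once.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    header = list(reader.fieldnames)
    rows = list(reader)
    tasks = defaultdict(list)
    for row in rows:
        tasks[(row["Version"], row["Task"])].append(row)
    for task_rows in tasks.values():
        if len(task_rows) != n_levels:
            raise ValueError("each task needs exactly one concept per level")
        levels = list(range(1, n_levels + 1))
        rng.shuffle(levels)  # avoid tying a level to a fixed concept position
        for row, level in zip(task_rows, levels):
            row[new_name] = str(level)
    # New attribute goes right after Version/Task/Concept, ahead of the others.
    fieldnames = header[:3] + [new_name] + header[3:]
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()

design_csv = """Version,Task,Concept,Att 1,Att 2
1,1,1,2,1
1,1,2,1,3
1,1,3,2,2
1,2,1,1,2
1,2,2,2,3
1,2,3,1,1
"""
print(add_minimal_overlap_attribute(design_csv, n_levels=3, rng=random.Random(3)))
```

Shuffling within each task keeps the new attribute's levels from being tied to concept position. The combined file would still need to be imported into a study whose attribute list matches the new column order, and its efficiency tested as noted above.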