Suppose the attributes being studied describe automobiles, with these levels:

BODY TYPE

Two-Door Sedan

Four-Door Sedan

Hatchback

Minivan

Convertible

DRIVE CONFIGURATION

Rear-Wheel Drive

Front-Wheel Drive

All-Wheel Drive

ORIGIN

Made in USA

Made in Japan

Made in Europe

COLOR

Red

Blue

Green

Yellow

White

Black

PRICE

$18,000

$20,000

$22,000

$24,000

$26,000

The ACA interview has several sections, each with a specific purpose. We've provided examples of the questions in each section, together with brief explanations of their purposes.

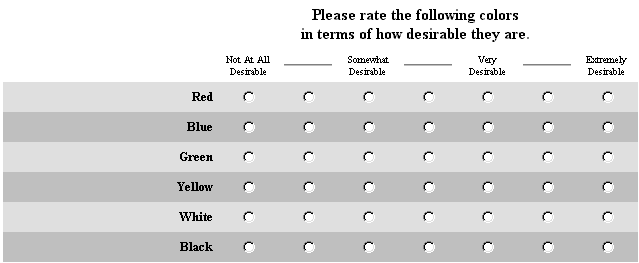

1) Preference for Levels (Required Section)

(The "ACA Rating" question type.)

First, the respondent rates the levels for preference. This question is usually omitted for attributes (such as price or quality) for which the respondent's preferences should be obvious. (When you input the attributes and levels, you can specify that the order of preference for levels is "best to worst" or "worst to best"; the Rating question is then skipped for such attributes.) The screen may look like the following:

(The rating scale can be defined from 2 to 9 points. We suggest using at least 5 scale points. In any case, it is probably not wise to use fewer scale points than the number of levels in any one attribute for which the Rating question is asked.)
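The scale-point guideline above can be checked mechanically before fielding a study. The following is a minimal sketch, not part of the ACA software: the function names, the dictionary layout, and the choice to treat Price as an a priori ordered attribute (so its Rating question is skipped) are all assumptions made for illustration.

```python
# Hypothetical helper for illustration only; not an ACA API.
ATTRIBUTES = {
    "Body Type": ["Two-Door Sedan", "Four-Door Sedan", "Hatchback",
                  "Minivan", "Convertible"],
    "Drive Configuration": ["Rear-Wheel Drive", "Front-Wheel Drive",
                            "All-Wheel Drive"],
    "Origin": ["Made in USA", "Made in Japan", "Made in Europe"],
    "Color": ["Red", "Blue", "Green", "Yellow", "White", "Black"],
    "Price": ["$18,000", "$20,000", "$22,000", "$24,000", "$26,000"],
}

# Assumption: Price is declared "best to worst", so it is never rated.
A_PRIORI_ORDERED = {"Price"}


def min_recommended_scale_points(attributes, ordered, floor=5):
    """Smallest scale that meets both suggestions in the text:
    at least `floor` points, and no fewer points than the number of
    levels in any attribute that receives the Rating question."""
    rated = [levels for name, levels in attributes.items()
             if name not in ordered]
    most_levels = max((len(levels) for levels in rated), default=0)
    return max(floor, most_levels)


def scale_is_advisable(scale_points, attributes, ordered):
    """Return True if a scale of this size follows the guideline."""
    if not 2 <= scale_points <= 9:
        raise ValueError("the rating scale must have 2 to 9 points")
    return scale_points >= min_recommended_scale_points(attributes, ordered)


print(min_recommended_scale_points(ATTRIBUTES, A_PRIORI_ORDERED))
```

For the automobile example, Color has six levels, so under these assumptions a scale of at least six points would be advisable.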

The respondent is required to check one radio button per attribute level. If a level is skipped, ACA will prompt the respondent to complete the question prior to moving to the next page in the interview.

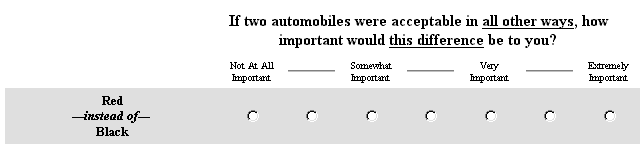

2) Attribute Importance (Optional Section)

(The "ACA Importance" question type.)

Having learned preferences for the levels within each attribute, we next determine the relative importance of each attribute to this respondent. This information is useful in two ways. First, it may allow us to eliminate some attributes from further evaluation if the interview would otherwise be too long. Second, it provides information upon which to base initial estimates of this respondent's utilities.

(Notes: if using ACA/HB for part-worth utility estimation, you may elect to omit the Importance section. If the standard format of the Importance question as described below doesn't fit your needs, you can also customize your own importance questions. Getting the Importance section right is critical. For example, we strongly recommend educating respondents regarding the full array of attribute options prior to asking them importance questions, so they use the scale consistently and know ahead of time which attribute(s) should be reserved to receive the highest scores.)

As a matter of fundamental philosophy, we do not ask about attribute importance with questions such as "How important is price?" The importance of an attribute is clearly dependent on magnitudes of differences among the levels being considered. For example, if all airline tickets from City A to City B were to cost between $100 and $101, then price couldn't be important in deciding which airline to select. However, if cost varied from $10 to $1000, then price would probably be seen as very important.

Our questioning is based on differences between those levels the respondent would like best and least, as illustrated:

(The rating scale can be defined from 2 to 9 points. We suggest using at least 4 scale points.)

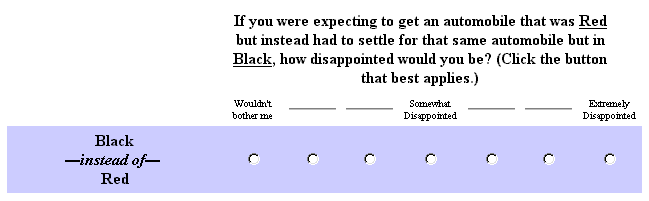

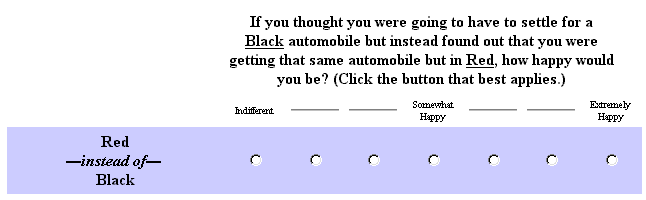

There are different ways to phrase the "importance" question, and some respondents find the notion of attribute "importance" difficult to comprehend. The best wording depends on the audience. Other possibilities with the ACA software include:

The "Regrets" Format:

The "Unexpected Windfall" Format:

At this point we have learned which attributes are most important for this respondent and which levels are preferred. From now on, the interview is focused on those most important attributes and combinations of the levels that imply the most difficult trade-offs.

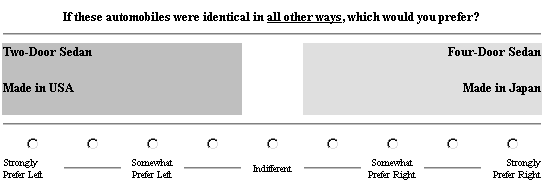

3) Paired-Comparison Trade-Off Questions

(The "ACA Pairs" question type.)

Next, a series of customized paired-comparison trade-off questions is presented. Up to this point in the interview, we have collected "prior" information; no conjoint analysis has been involved. The Pairs section elicits the conjoint trade-offs. In each case the respondent is shown two product concepts and is asked which is preferred and how strongly. The example below presents concepts differing on only two attributes. Although concepts may be specified on up to five attributes, simple concepts like these present an easy task for the respondent and are a useful way to begin this section of the interview.

(The rating scale can be defined from 2 to 9 points. We suggest using at least 7 scale points.)

The number of attributes appearing in each concept is specified by the author, and can be varied during the interview. Concepts described on more attributes have the advantage of seeming more realistic. It is also true that statistical estimation is somewhat more efficient with more attributes.

However, with more attributes, the respondent must process more information and the task is more difficult. Experimental evidence indicates that a trade-off occurs: as the number of attributes in the concepts increases, respondents are more likely to become confused. It appears best to start with only two attributes. Most respondents can handle three attributes after they've become familiar with the task. Preliminary evidence suggests that beyond three attributes, gains in efficiency are usually offset by respondent confusion due to task difficulty.

The computer starts with a crude set of estimates for the respondent's part-worths, and updates them following each submitted page (you can specify how many pairs questions will be completed per page). The crude estimates are constructed from the respondent's preference ranking or rating for levels, and ratings of importance of attributes. Each pairs question is chosen by the computer to provide the most incremental information, taking into account what is already known about this respondent's part-worths. The interview continues in this manner until a termination criterion (specified by the author) is satisfied.
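To make the idea of "crude" starting estimates concrete, here is a minimal sketch of one plausible way to combine level ratings with attribute importance ratings. It is an illustration under stated assumptions, not necessarily the computation the ACA software performs: within each attribute the ratings are centered at zero, rescaled so the best-to-worst range equals the attribute's importance rating, and returned as part-worths. The function name and data layout are hypothetical.

```python
def prior_part_worths(level_ratings, importances):
    """Build rough starting part-worths.

    level_ratings: {attribute: {level: rating}}
    importances:   {attribute: importance rating}
    Assumption: an attribute's part-worth range equals its importance.
    """
    part_worths = {}
    for attribute, ratings in level_ratings.items():
        values = list(ratings.values())
        mean = sum(values) / len(values)
        spread = max(values) - min(values)
        # If every level got the same rating, the attribute carries no
        # preference information, so all its part-worths are zero.
        scale = importances[attribute] / spread if spread else 0.0
        part_worths[attribute] = {
            level: (rating - mean) * scale
            for level, rating in ratings.items()
        }
    return part_worths


# Invented responses for two of the automobile attributes.
ratings = {
    "Origin": {"Made in USA": 5, "Made in Japan": 3, "Made in Europe": 1},
    "Drive Configuration": {"Rear-Wheel Drive": 2, "Front-Wheel Drive": 3,
                            "All-Wheel Drive": 4},
}
importance = {"Origin": 4, "Drive Configuration": 2}

for attribute, levels in prior_part_worths(ratings, importance).items():
    print(attribute, levels)
```

Centering within each attribute reflects the fact that only differences among levels of the same attribute are meaningful at this stage; the importance rating then sets how much each attribute can contribute to a concept's total utility.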

Every time the respondent completes a page of pairs question(s), the estimate of the respondent's part-worths is updated. Updating the part-worths improves the quality of subsequent pairs questions. We strongly encourage you to place page breaks often throughout the pairs questions. If you can count on fast load times (for example, when respondents are using fast web connections), we suggest a page break after each pairs question.
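Why do better part-worth estimates lead to better pairs? One simple heuristic, assumed here purely for illustration, is utility balance: show two concepts whose estimated utilities are as close as possible, so that the answer is hard to predict and therefore informative. The sketch below applies that heuristic to a set of current estimates. The software's actual selection rule may weigh other considerations, and all names here are hypothetical.

```python
from itertools import combinations, product


def concept_utility(concept, part_worths):
    """Sum the current part-worth estimates for a concept's levels."""
    return sum(part_worths[attr][level] for attr, level in concept.items())


def most_balanced_pair(part_worths, attributes):
    """Among all concept pairs that differ on every listed attribute,
    return the pair with the smallest estimated utility difference."""
    concepts = [
        dict(zip(attributes, levels))
        for levels in product(*(part_worths[a] for a in attributes))
    ]
    candidates = [
        (left, right)
        for left, right in combinations(concepts, 2)
        if all(left[a] != right[a] for a in attributes)
    ]
    return min(
        candidates,
        key=lambda pair: abs(concept_utility(pair[0], part_worths)
                             - concept_utility(pair[1], part_worths)),
    )


# Invented current estimates for two attributes.
estimates = {
    "Origin": {"Made in USA": 2, "Made in Japan": 0, "Made in Europe": -2},
    "Drive Configuration": {"Rear-Wheel Drive": -1, "Front-Wheel Drive": 0,
                            "All-Wheel Drive": 1},
}

left, right = most_balanced_pair(estimates, ["Origin", "Drive Configuration"])
print(left)
print(right)
```

Because the estimates change after every submitted page, re-running a selection like this on fresh estimates yields pairs that are closer to the respondent's true indifference points, which is the motivation for frequent page breaks.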

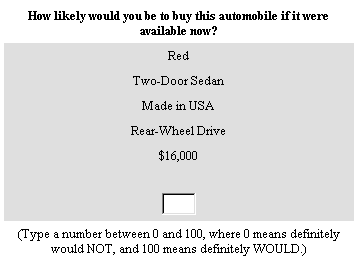

4) Calibration Concepts (Optional Section)

(The "ACA Calibration" question type.)

Finally, the computer composes a series of "calibration concepts" using those attributes determined to be most important. These concepts are chosen to occupy the entire range from very unattractive to very attractive for the respondent. The respondent is asked a "likelihood of buying" question about each.

The first concept presented is the one we expect the respondent to like least among all possible concepts, and the second is the one we expect the respondent to like best. Those two concepts establish a frame of reference. The remaining concepts are selected to have intermediate levels of attractiveness.

This information can be used to calibrate the part-worth utilities obtained in the earlier part of the interview for use in Purchase Likelihood simulations during analysis. Conjoint part-worths are normally determined only to within an arbitrary linear transformation; one can add any constant to all the values for any attribute and multiply all part-worths by any positive constant. The purpose of this section is to scale the part-worths non-arbitrarily, so that sums of part-worths for these concepts are approximately equal to logit transforms of the respondent's likelihood percentages.
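The scaling step can be sketched as a simple regression: take the logit of each stated likelihood, then fit a line relating it to the sum of part-worths for that concept. The code below is a minimal illustration under assumptions, not the estimation routine used by the software. In particular, the ordinary least-squares fit, the clipping of answers to the 5 to 95 percent range (to keep the logit finite), and all function names are choices made for this example.

```python
import math


def logit(p):
    """Log-odds of a probability strictly between 0 and 1."""
    return math.log(p / (1.0 - p))


def fit_calibration(utility_sums, likelihood_percents, clip=(5.0, 95.0)):
    """Least-squares fit of logit(likelihood) = a + b * utility_sum.

    Assumption: answers are clipped to `clip` before the transform so
    that responses of 0 or 100 do not produce infinite logits.
    """
    low, high = clip
    x = list(utility_sums)
    y = [logit(min(max(pct, low), high) / 100.0)
         for pct in likelihood_percents]
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def purchase_likelihood(utility_sum, a, b):
    """Invert the logit to predict a likelihood percentage."""
    return 100.0 / (1.0 + math.exp(-(a + b * utility_sum)))


# Invented data: least-liked concept first, best-liked second,
# then concepts of intermediate attractiveness.
sums = [-3.0, 3.0, -1.0, 1.0]
answers = [10.0, 90.0, 30.0, 70.0]

a, b = fit_calibration(sums, answers)
print(round(purchase_likelihood(0.0, a, b), 1))
```

Multiplying every part-worth by `b` and adding `a` (for example, spread across the attributes) yields utilities whose sums approximate the logits of the stated likelihoods, which removes the arbitrariness of the original scale.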

The screen format is:

If you plan only to conduct share of preference simulations, and particularly if you are using the ACA/HB system for hierarchical Bayes estimation, there is no need to include calibration concepts in your ACA survey. See Estimating ACA Utilities for more information on the use of calibration concepts.

Setting Page Breaks in ACA Surveys

ACA lets you include multiple questions per page. If a page does not fit on the respondent's screen, a scroll bar lets the respondent scroll down the page. At the bottom of each page is a "Next" button that respondents click when they have completed the current page. Page breaks must occur between the major ACA sections:

• Rating

• Importance

• Pairs

• Calibration Concepts

You may include additional page breaks within each section if you think it is more convenient for your respondents and provides a more desirable overall layout. We suggest you include page breaks within the Pairs section so that the utilities can be updated and the subsequent pairs have an opportunity to become more customized and better balanced. The number of pairs questions to include per page represents a trade-off between the benefits of updating and the time required to process and receive a new page of pairs questions over the respondent's Web connection.