Researchers across many disciplines face the common task of measuring the preference for, or importance of, multiple items, such as brands, product features, employee benefits, or advertising claims. The most common (and easiest) scaling approaches have used rating, ranking, or chip allocation (i.e., constant-sum) tasks.

Example Rating, Ranking, and Allocation questions are shown below (this software does not employ any of these approaches, but we repeat them here in low-tech paper-and-pencil format for exposition):

Example Rating Task:

Please rate the following in terms of importance to you when eating at a fast food restaurant. Use a scale from 0 to 10, where "0" means "not important at all" and "10" means "extremely important."

___ Clean bathrooms

___ Healthy food choices

___ Good taste

___ Reasonable prices

___ Has a play area

___ Restaurant is socially responsible

___ Courteous staff

Example Ranking Task:

Please rank (from most important to least important) the following in terms of importance to you when eating at a fast food restaurant. Put a "1" next to the most important item, a "2" next to the next most important item, etc.

___ Clean bathrooms

___ Healthy food choices

___ Good taste

___ Reasonable prices

___ Has a play area

___ Restaurant is socially responsible

___ Courteous staff

Example Allocation Task:

Please tell us how important the following are to you when eating at a fast food restaurant. Show the importance by assigning points to each attribute. The more important the attribute, the more points you should give it. You have 100 points in total, and your answers must sum to 100.

___ Clean bathrooms

___ Healthy food choices

___ Good taste

___ Reasonable prices

___ Has a play area

___ Restaurant is socially responsible

___ Courteous staff

Total: ______

These questions may be asked in a variety of ways today, including grid-style layouts with radio buttons and drag-and-drop for ranking. It is also commonplace to measure many more items than the seven shown here. (These examples are not intended to represent the best possible wording, but are meant to be representative of what is typically used in practice.)

The common approaches (rating, ranking, and allocation tasks) have weaknesses.

•Rating tasks assume that respondents can communicate their true affinity for an item using a numeric rating scale. Rating data are often negatively affected by lack of discrimination among items and by scale use bias (the tendency for respondents to use the scale in different ways, such as mainly using the top or bottom of the scale, or tending to use more or fewer of the available scale points).

•Ranking tasks become difficult to manage when there are more than about seven items, and the resulting data are on an ordinal scale only.

•Allocation tasks are also challenging for respondents when there are many items. Even with a manageable number of items, some respondents may have difficulty distributing values that sum to a target value. The mechanical task of making the allocated points sum to the target amount may interfere with respondents revealing their true preferences.
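To make the rating weaknesses above concrete, the following minimal sketch compares two made-up respondents who answer the example rating task on a 0 to 10 scale. The respondent names, the ratings, and the `summarize` helper are all hypothetical and serve only as illustration. One respondent uses only the top of the scale and the other uses the full range, so their raw ratings are hard to compare directly (scale use bias), and the first respondent's answers contain many ties (lack of discrimination).

```python
from itertools import combinations
from statistics import mean

# Hypothetical 0-10 importance ratings from two made-up respondents.
top_of_scale_user = {
    "Clean bathrooms": 9,
    "Healthy food choices": 8,
    "Good taste": 10,
    "Reasonable prices": 9,
    "Has a play area": 7,
    "Restaurant is socially responsible": 8,
    "Courteous staff": 9,
}
full_range_user = {
    "Clean bathrooms": 6,
    "Healthy food choices": 4,
    "Good taste": 10,
    "Reasonable prices": 7,
    "Has a play area": 0,
    "Restaurant is socially responsible": 3,
    "Courteous staff": 5,
}


def summarize(ratings):
    """Summarize how one respondent used the rating scale."""
    values = list(ratings.values())
    # A tied pair is a pair of items that received exactly the same rating.
    tied_pairs = sum(1 for a, b in combinations(values, 2) if a == b)
    return {
        "mean": mean(values),
        "range_used": max(values) - min(values),
        "tied_pairs": tied_pairs,
    }


for name, ratings in [("top of scale", top_of_scale_user),
                      ("full range", full_range_user)]:
    print(name, summarize(ratings))
```

With seven items there are 21 possible item pairs, so each tie is a pair of items that the rating scale failed to separate for that respondent.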

Researchers seek scaling approaches that feature:

•Ease of use for respondents from a variety of educational and cultural backgrounds

•Strong discrimination among the items

•Robust scaling properties (ratio-scaled data preferred)

•Reduction or elimination of scale use bias

A very old approach, the Method of Paired Comparisons (MPC), seems to perform well on all these requirements. With MPC, respondents are shown items two at a time and are asked which of the two they prefer (or which is more important, etc.).

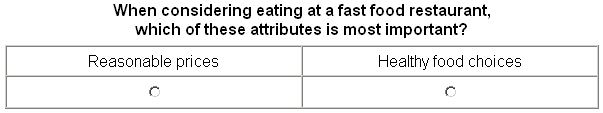

Example Paired Comparison Task:

The respondent is not permitted to state that the two items are equally preferred or important. Each respondent is typically asked to evaluate multiple pairs. The pairs are selected using an experimental plan so that each respondent evaluates every item across the pairs (though typically not every possible pairing) and each item appears about an equal number of times.
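As an illustration of what such a plan can look like, here is a minimal sketch of one simple way to pick a balanced subset of pairs. It is an assumption made for exposition, not the design algorithm used by any particular software. The hypothetical function `cyclic_pair_design` pairs each item with the items a fixed number of positions further along the list (wrapping around), which guarantees that every item appears equally often without showing every possible pairing.

```python
import random
from collections import Counter

ITEMS = [
    "Clean bathrooms",
    "Healthy food choices",
    "Good taste",
    "Reasonable prices",
    "Has a play area",
    "Restaurant is socially responsible",
    "Courteous staff",
]


def cyclic_pair_design(items, n_offsets, seed=None):
    """Build a balanced list of pairs (hypothetical helper, for illustration).

    For each offset d = 1..n_offsets, item i is paired with item (i + d) mod n.
    Every item then appears exactly 2 * n_offsets times and no pair repeats.
    """
    n = len(items)
    if not 1 <= n_offsets <= (n - 1) // 2:
        raise ValueError("n_offsets must be between 1 and (len(items) - 1) // 2")
    rng = random.Random(seed)
    pairs = []
    for offset in range(1, n_offsets + 1):
        for i in range(n):
            pair = [items[i], items[(i + offset) % n]]
            rng.shuffle(pair)  # randomize which item is shown first
            pairs.append(tuple(pair))
    rng.shuffle(pairs)  # randomize the order in which pairs are asked
    return pairs


design = cyclic_pair_design(ITEMS, n_offsets=2, seed=1)
appearances = Counter(item for pair in design for item in pair)
print(len(design), "pairs shown out of", len(ITEMS) * (len(ITEMS) - 1) // 2, "possible")
print(appearances)
```

With the seven example items and two offsets, the respondent sees 14 of the 21 possible pairs, and each item appears four times.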

MPC can be extended to choices from triples (three items at a time), quads (four items at a time), or even larger sets. There would seem to be benefits from asking respondents to evaluate three or more items at a time (up to some reasonable set size). One research paper we are aware of (Rounds et al. 1978) suggests that asking respondents to complete sets of three to five items produces parameter estimates similar to those from pairs, and that respondents may prefer completing fewer sets with more items to completing more sets with just two items.
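The trade-off between set size and number of sets is simple counting. The sketch below assumes a hypothetical study with 20 items in which each item should be shown three times; both numbers and the `sets_needed` helper are illustrative only. It says nothing about statistical precision, only about how many questions the respondent must answer.

```python
import math


def sets_needed(n_items, appearances_per_item, set_size):
    """Number of sets required so each item can be shown the requested number of times."""
    return math.ceil(n_items * appearances_per_item / set_size)


# Hypothetical study: 20 items, each shown 3 times.
for set_size in (2, 3, 4, 5):
    print(set_size, "items per set ->", sets_needed(20, 3, set_size), "sets")
```

Under these assumptions, pairs require 30 sets, whereas sets of five require only 12.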

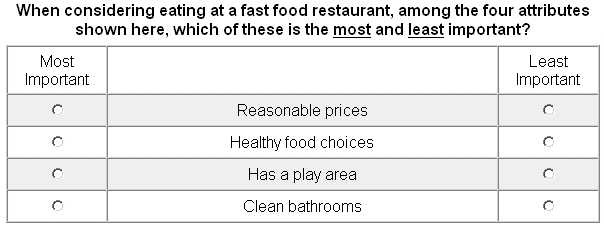

A much newer technique, MaxDiff, may perform even better than MPC, especially in terms of overall question efficiency. MaxDiff questionnaires ask respondents to indicate both the most and least preferred/important items within each set.
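One way to see where the question efficiency comes from: choosing a "most" and a "least" item within a set tells us the outcome of several paired comparisons at once, because the "most" item beats every other item shown and every other item shown beats the "least" item. The sketch below simply counts those implied comparisons. The function name `implied_preferences` and the example answers are hypothetical, and this is not a description of how scores are actually estimated.

```python
def implied_preferences(shown_items, best, worst):
    """Return the (preferred, less_preferred) pairs implied by one best/worst answer."""
    if best == worst or best not in shown_items or worst not in shown_items:
        raise ValueError("best and worst must be two different items from the set")
    implied = set()
    for item in shown_items:
        if item != best:
            implied.add((best, item))   # best is preferred to every other item
        if item != worst:
            implied.add((item, worst))  # every other item is preferred to worst
    return implied


# Hypothetical answer to a set of four items.
shown = ["Clean bathrooms", "Good taste", "Reasonable prices", "Has a play area"]
pairs = implied_preferences(shown, best="Good taste", worst="Has a play area")
total_possible = len(shown) * (len(shown) - 1) // 2
print(len(pairs), "of", total_possible, "possible paired comparisons are implied")
```

For a set of four items, two clicks imply five of the six possible paired comparisons; only the comparison between the two items chosen as neither most nor least remains unknown.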

Example MaxDiff Task:

Interest in MaxDiff has blossomed recently, and papers on MaxDiff have won "best presentation" awards at recent ESOMAR and Sawtooth Software conferences.